I’m using the OneDrive storage included in my Office 365 subscription to keep a copy of my local backup in the cloud. My subscription provides 1 TB of cloud storage per user, which is a decent amount of space, at least for my needs, so I was really surprised when I received a warning that my OneDrive was full. How can that happen? My backup data is only 0.5 TB locally, so how can it consume twice as much space in OneDrive?

What takes up so much storage space?

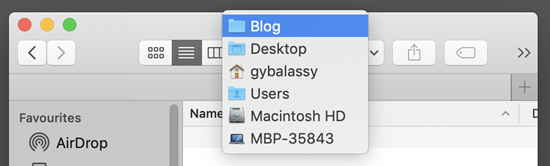

I sync tons of data to OneDrive, so first I had to understand what actually consumes the most storage space. OneDrive does not show folder sizes by default, but here is a trick to view them:

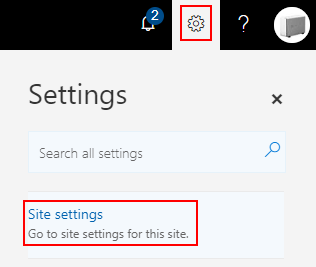

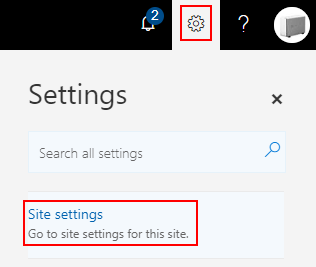

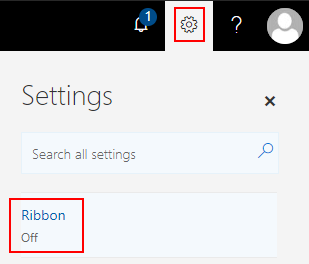

Click the gear icon in the top right corner, then click Site settings in the sidebar:

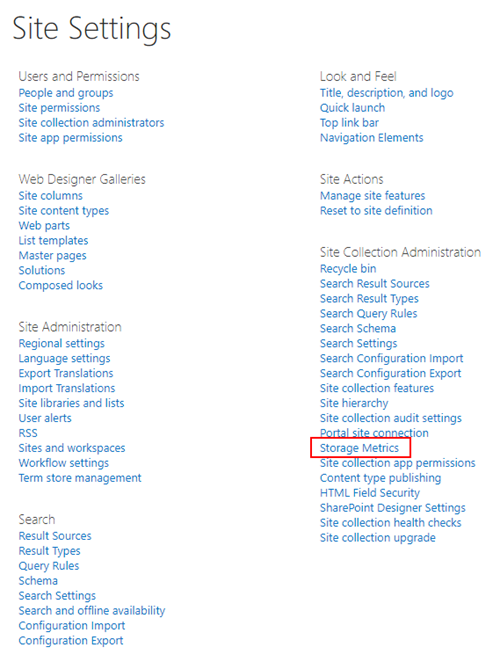

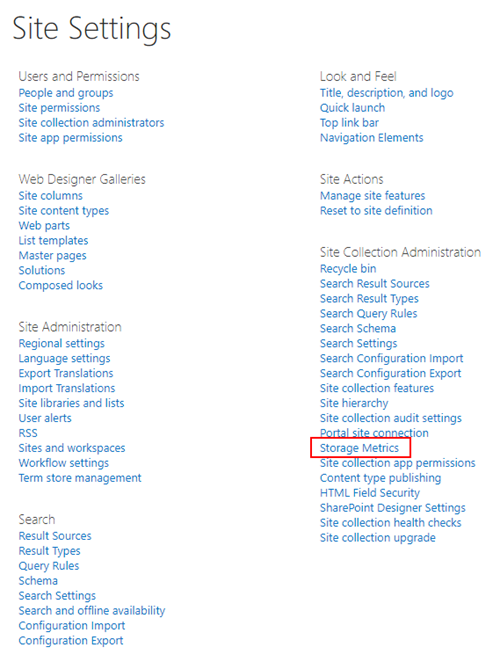

If you have ever administered SharePoint, this list of menu items will look familiar: your OneDrive for Business storage is actually a SharePoint site collection. Click Storage Metrics in the right column:

Here you can see all your files, and even the system folders, each with an inline bar, so you can immediately spot which folder consumes the most space. All your data is actually in the Documents folder:

Click a folder name to drill down into its subfolders and files. Note that the Total Size includes the following:

- Deleted files in Recycle Bin

- All versions of the file

- Metadata associated with the file
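The arithmetic behind the surprise can be sketched with a small model. This is a hypothetical illustration, not a OneDrive API: each file's Total Size is its current content plus every retained old version plus its metadata, and deleted files keep counting until they are purged. It assumes, purely for illustration, that every file of a 0.5 TB backup was overwritten once with a copy of the same size, which alone is enough to double the space used.

```python
from dataclasses import dataclass, field


@dataclass
class StoredFile:
    """Hypothetical model of how one file counts toward the quota."""
    current_size: int
    old_version_sizes: list[int] = field(default_factory=list)
    metadata_size: int = 0
    in_recycle_bin: bool = False  # deleted, but not yet purged

    def total_size(self) -> int:
        # Total Size = current content + every retained old version + metadata
        return self.current_size + sum(self.old_version_sizes) + self.metadata_size


def quota_usage(files: list[StoredFile]) -> int:
    # Recycled files are summed too: they still count toward the quota.
    return sum(f.total_size() for f in files)


def reclaimable(files: list[StoredFile]) -> int:
    """Space freed by purging recycled files and deleting old versions."""
    return sum(
        f.total_size() if f.in_recycle_bin else sum(f.old_version_sizes)
        for f in files
    )


if __name__ == "__main__":
    # Sizes in GB: a 500 GB backup whose two files were each overwritten once.
    backup = [StoredFile(250, [250]), StoredFile(250, [250])]
    print(quota_usage(backup))  # 1000
    print(reclaimable(backup))  # 500
```

In this model the current content never changes size; everything above 500 GB is retained history, which is exactly the part that versioning and the recycle bins keep around.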

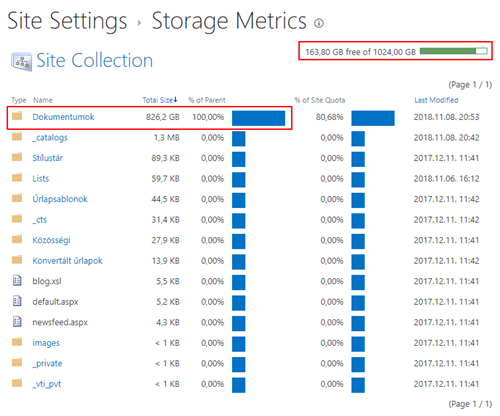

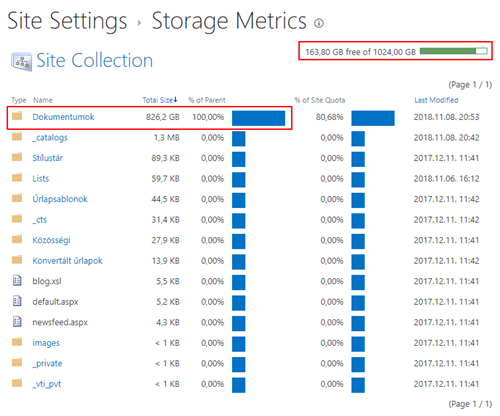

To check the versions of a file, click the Version History link at the file level:

Here you can see that OneDrive for Business has file versioning enabled by default, so when you overwrite a file, the previous version is kept and consumes storage space from your quota. Click the Delete All Versions link to get rid of the old versions:

Unfortunately, you have to do this file by file: there is no UI option to delete the old versions of all your files at once.

Recycle Bin

As a first step, I wanted to check whether my Recycle Bin contained any files, because anything in it counts toward my quota.

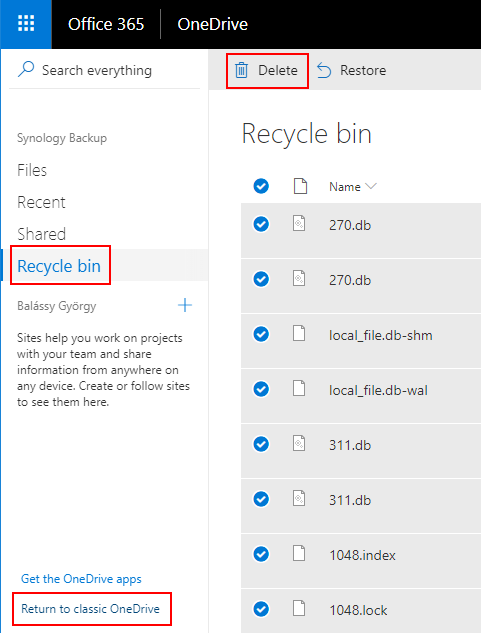

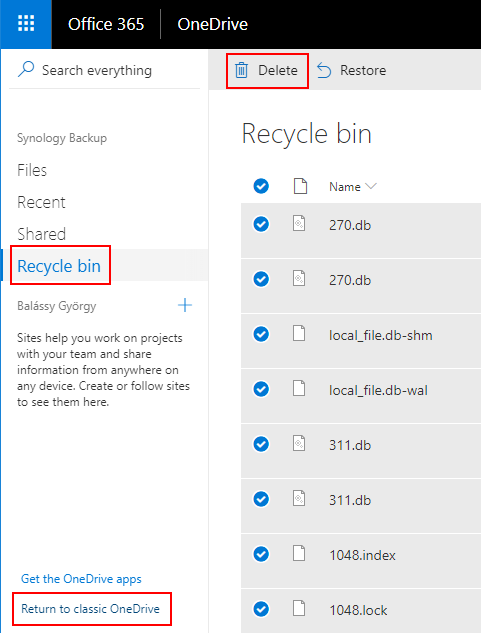

So I opened my OneDrive in the browser and clicked Recycle bin in the left menu:

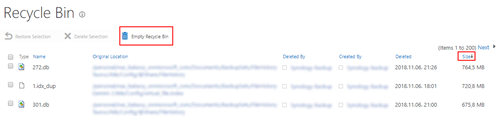

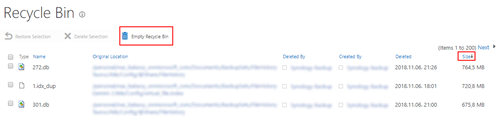

Unfortunately, this view does not show how big the files in the Recycle Bin are, but if you click Return to classic OneDrive in the bottom left corner, you get a different view where you can sort the files by Size and empty the Recycle Bin with a single click:

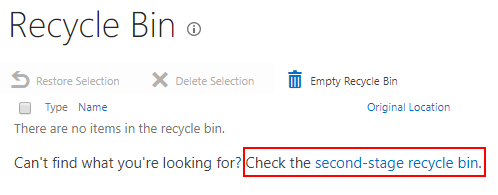

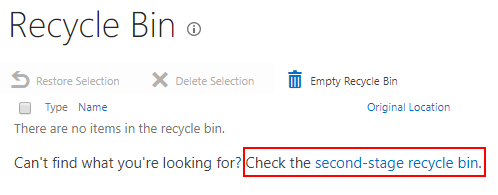

Do you think you are done? Not yet! The Recycle Bin protects you from accidental file deletion, but there is also a second-stage recycle bin that protects you from accidentally emptying the Recycle Bin:

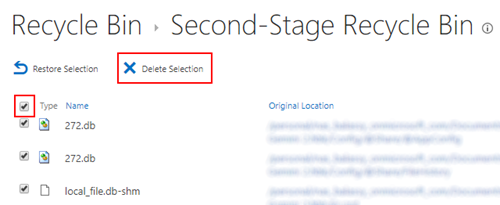

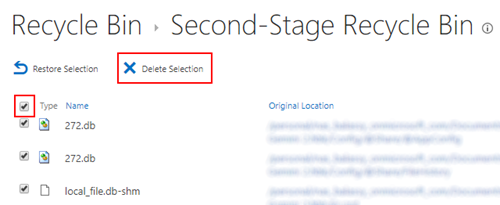

Click the second-stage recycle bin link (which is actually the admin-level recycle bin of the site collection), and you will see that your files are still there:

Click the checkbox at the top of the first column to select all files, then click Delete Selection. Bad news: the list is paged, so you have to repeat this page by page 😦
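The page-by-page routine boils down to a simple loop: select everything on the current page, delete it, and repeat until the view is empty. The sketch models this with a hypothetical in-memory `RecycleBinModel` class (not a SharePoint API) and assumes that deleted items disappear from the view, so the next batch always shows up on the first page.

```python
class RecycleBinModel:
    """Hypothetical stand-in for the paged second-stage recycle bin view."""

    def __init__(self, items: list[str], page_size: int = 100) -> None:
        self.items = list(items)
        self.page_size = page_size

    def first_page(self) -> list[str]:
        return self.items[: self.page_size]

    def delete(self, selected: list[str]) -> None:
        chosen = set(selected)
        self.items = [item for item in self.items if item not in chosen]


def empty_page_by_page(recycle_bin: RecycleBinModel) -> int:
    """Delete every item one page at a time; return the number of pages cleared."""
    pages = 0
    while True:
        page = recycle_bin.first_page()  # "select all" only covers the visible page
        if not page:
            return pages
        recycle_bin.delete(page)  # "Delete Selection"
        pages += 1


if __name__ == "__main__":
    second_stage = RecycleBinModel([f"file{i}.bak" for i in range(250)])
    print(empty_page_by_page(second_stage))  # 3
```

With 250 items and 100 items per page, the selection has to be repeated three times (100, 100, then 50 items).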

Disabling document versioning in OneDrive for Business

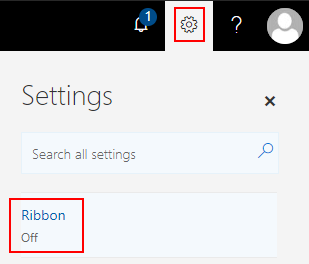

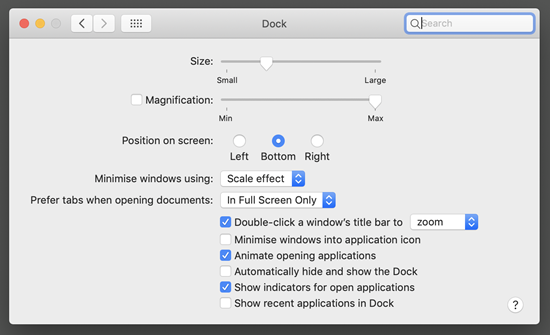

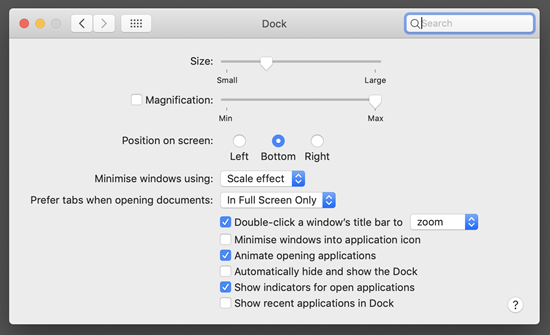

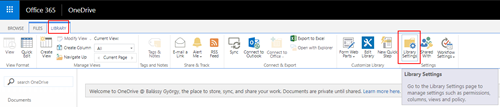

Because your OneDrive for Business is actually a SharePoint site collection, and all your files are in the Documents folder, which is actually a SharePoint document library, you can disable versioning for all your documents by disabling it in that single document library. To find this setting, first click Return to classic OneDrive in the bottom left corner, then click the gear icon in the top right corner, and then click Ribbon in the right sidebar to show the ribbon:

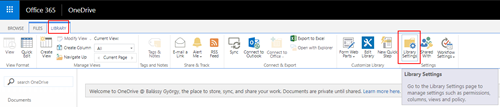

Expand the ribbon by clicking the Library tab, and then click Library Settings towards the right end of the ribbon:

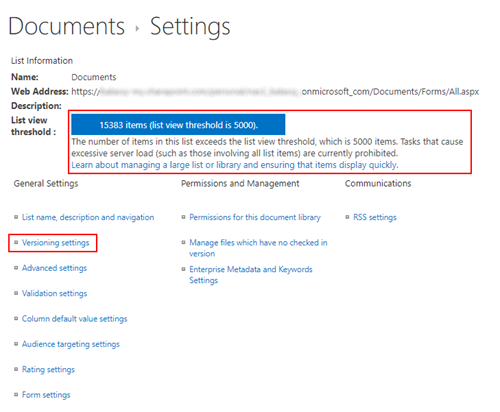

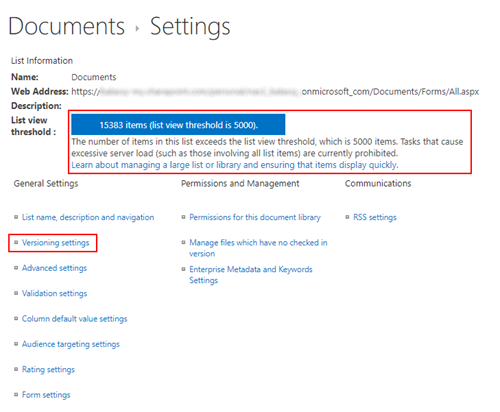

The settings page of the document library contains several useful options and pieces of information:

First, if you have more than 5,000 files in your OneDrive (like I do), a List view threshold warning is shown on this page. This will be important later because, as the description says, you cannot perform operations on that many items at once.

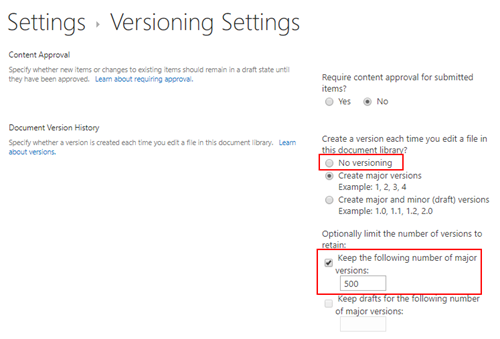

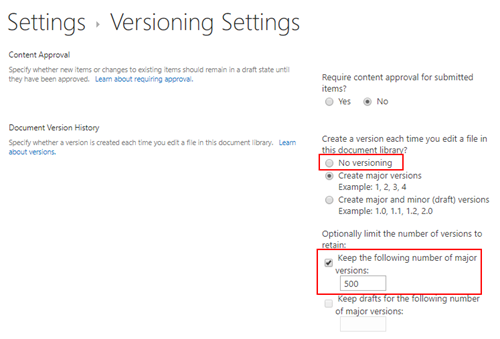

Second, the Versioning settings link leads to the page where you can disable versioning, or limit the number of versions retained:

Both options can help you reduce the storage space needed for your files. Note, however, that turning off versioning here does not delete the existing versions of your files!
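To estimate what limiting the number of retained versions could save, here is a small sketch of a "keep only the newest N old versions" rule. The function names are hypothetical, and the sketch assumes the old versions of each file are listed oldest first. It only computes what such a rule would remove; it does not describe how or when SharePoint applies the limit.

```python
def versions_to_delete(old_version_sizes: list[int], keep: int) -> list[int]:
    """Return the old versions that fall outside the newest `keep` ones.

    Assumes `old_version_sizes` is ordered oldest first.
    """
    if keep < 0:
        raise ValueError("keep must be non-negative")
    excess = len(old_version_sizes) - keep
    return old_version_sizes[:excess] if excess > 0 else []


def space_saved(files: dict[str, list[int]], keep: int) -> int:
    """Total size of the versions a keep-`keep` rule would remove across files."""
    return sum(sum(versions_to_delete(v, keep)) for v in files.values())


if __name__ == "__main__":
    history = {"backup.zip": [100, 110, 120, 130], "notes.docx": [1, 2]}
    print(space_saved(history, keep=2))  # 210
    print(space_saved(history, keep=0))  # 463
```

Setting `keep=0` corresponds to getting rid of all old versions, which is what the UI and a programmatic cleanup have to do separately, because switching versioning off leaves the existing history in place.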

Delete old document versions of all files

Unfortunately, there is no UI option to delete the old versions of all your documents with a single click, but thankfully SharePoint has an API, so you can do it programmatically.

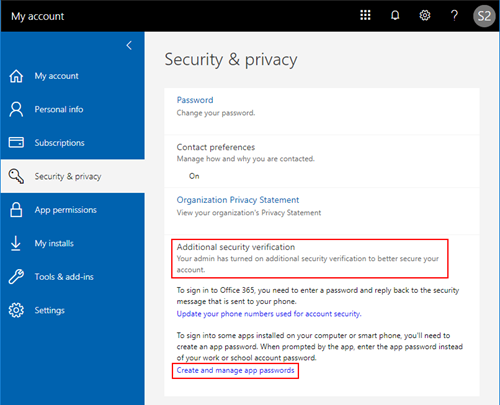

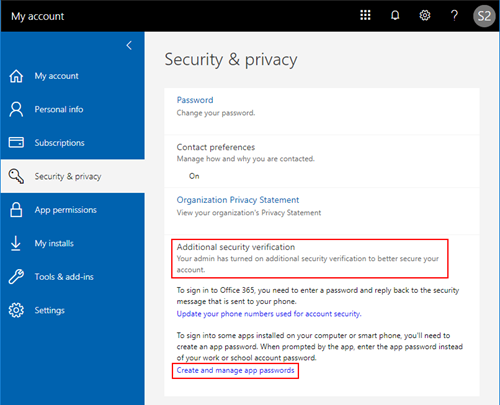

If you have multi-factor authentication enabled (you do, right?), the first step is to generate an app password. To do that, click your avatar in the upper right corner, then click the My account link, or navigate directly to https://portal.office.com/account/.

In the left menu, click Security & privacy, then in the middle pane click Additional security verification. It does not look like a menu item, but it is one, and clicking it expands the pane to show more items:

Click the Create and manage app passwords link and use that page to generate a unique password for your app.

The next step is to download the SharePoint Online SDK, which installs a set of DLLs into the C:\Program Files\Common Files\microsoft shared\Web Server Extensions\16\ISAPI folder. You can use these DLLs with the PowerShell scripts published by Arleta Wanat to the TechNet Script Center:

These scripts are very handy, but note that they have a limitation: they cannot handle more than 5,000 files, and you may receive the following exception:

“Microsoft.SharePoint.Client.ServerException: The attempted operation is prohibited because it exceeds the list view threshold enforced by the administrator.”

Thankfully, CAML can limit the number of returned items with RowLimit and supports paging; however, this does not always work for recursive queries. Running a separate CAML query against each folder, on the other hand, does work.
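Here is a sketch of the per-folder paging approach. It builds a CAML view (`View`, `Query`, `OrderBy`, and `RowLimit Paged="TRUE"` are standard CAML elements) without a `Scope` attribute, so the query is not recursive, and then keeps requesting pages until no next-page position comes back. The `fetch_page` callable is a hypothetical placeholder for the actual SharePoint call; in the client-side object model the XML would typically go into `CamlQuery.ViewXml`, with `CamlQuery.FolderServerRelativeUrl` selecting the folder and `ListItemCollectionPosition` carrying the paging state. A fake `fetch_page` is included so the sketch runs on its own.

```python
from typing import Callable, Optional
from xml.etree import ElementTree as ET

# (items on this page, opaque position of the next page or None when done)
Page = tuple[list[str], Optional[str]]
FetchPage = Callable[[str, str, Optional[str]], Page]


def build_view_xml(row_limit: int = 100) -> str:
    """Paged, non-recursive CAML view: no Scope attribute, so one folder only."""
    view = ET.Element("View")
    query = ET.SubElement(view, "Query")
    order_by = ET.SubElement(query, "OrderBy")
    ET.SubElement(order_by, "FieldRef", Name="ID")  # ID is always indexed
    limit = ET.SubElement(view, "RowLimit", Paged="TRUE")
    limit.text = str(row_limit)
    return ET.tostring(view, encoding="unicode")


def query_folder(folder: str, fetch_page: FetchPage, row_limit: int = 100) -> list[str]:
    """Run the same query against one folder until the last page is returned."""
    view_xml = build_view_xml(row_limit)
    items: list[str] = []
    position: Optional[str] = None
    while True:
        page, position = fetch_page(folder, view_xml, position)
        items.extend(page)
        if position is None:
            return items


def make_fake_fetch(data: dict[str, list[str]]) -> FetchPage:
    """Hypothetical test double that honours RowLimit like a paged server."""
    def fetch(folder: str, view_xml: str, position: Optional[str]) -> Page:
        limit = int(ET.fromstring(view_xml).findtext("RowLimit"))
        start = int(position) if position else 0
        end = start + limit
        items = data[folder]
        return items[start:end], (str(end) if end < len(items) else None)
    return fetch


if __name__ == "__main__":
    data = {"/Documents/Backup": [f"file{i}" for i in range(250)]}
    print(len(query_folder("/Documents/Backup", make_fake_fetch(data))))  # 250
```

Because every request asks for at most `row_limit` items from a single folder, no individual query has to touch more than a page worth of items at once.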

I implemented my final solution in a good old .NET console application using the SharePoint client-side object model. The application executes the following steps:

- Connects to your OneDrive for Business or SharePoint site collection.

- Finds the Documents document library.

- Iterates through the specified subfolder paths in the document library.

- Runs CAML queries in every folder to retrieve the documents (files). A single query retrieves at most 100 documents, and the query is repeated until all documents are processed.

- If a document has multiple versions, the old ones are deleted.

You can find the code on GitHub and customize it to your needs: https://github.com/balassy/OneDriveVersionCleaner
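For readers who want the shape of the algorithm before opening the repository, here is a Python sketch of the steps listed above. It is not the code from GitHub (that is a .NET console application using the SharePoint client-side object model); the `DocumentLibrary` interface and the `InMemoryLibrary` class are hypothetical stand-ins so the loop can run on its own. Each folder is paged 100 files at a time, and only the old versions of a file are removed, never the current one.

```python
from dataclasses import dataclass, field
from typing import Optional, Protocol


class DocumentLibrary(Protocol):
    """Hypothetical interface standing in for the Documents library."""

    def files_page(self, folder: str, position: Optional[int], limit: int
                   ) -> tuple[list[str], Optional[int]]: ...
    def old_version_count(self, file: str) -> int: ...
    def delete_old_versions(self, file: str) -> None: ...


def clean_versions(library: DocumentLibrary, folders: list[str], page_size: int = 100) -> int:
    """Delete old versions in every given folder; return how many files were cleaned."""
    cleaned = 0
    for folder in folders:  # iterate through the specified subfolder paths
        position: Optional[int] = None
        while True:  # query again until every file in the folder is processed
            files, position = library.files_page(folder, position, page_size)
            for file in files:
                if library.old_version_count(file) > 0:
                    library.delete_old_versions(file)  # the current version stays
                    cleaned += 1
            if position is None:
                break
    return cleaned


@dataclass
class InMemoryLibrary:
    """Hypothetical in-memory library used only to exercise the loop."""
    folders: dict[str, list[str]]
    old_versions: dict[str, int] = field(default_factory=dict)

    def files_page(self, folder, position, limit):
        start = position or 0
        end = start + limit
        files = self.folders[folder]
        return files[start:end], (end if end < len(files) else None)

    def old_version_count(self, file):
        return self.old_versions.get(file, 0)

    def delete_old_versions(self, file):
        self.old_versions[file] = 0


if __name__ == "__main__":
    names = [f"Backup/file{i}" for i in range(250)]
    lib = InMemoryLibrary({"Backup": names}, {n: 3 for n in names[::10]})
    print(clean_versions(lib, ["Backup"]))  # 25
```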
